DGPT is an AI innovation platform that helps owners of idle GPU computing power generate profits.

Company Background

Company name: DGPT Corporation Inc.

Platform name: DGPT Decentralised Computing Power Profit Platform

DGPT was founded in 2021 by a group of technology professionals passionate about artificial intelligence (AI) and was formally established as a company in 2023 with the aim of driving the intelligent transformation of enterprises. Drawing on its industry experience and leading-edge technology, the company has become a pioneer and leader in enterprise-level AI. DGPT Corporation focuses on providing platform-centric, full-stack AI solutions.

The DGPT platform is the company’s decentralised computing power revenue platform, built to address the shortage of computing power that enterprises face during their intelligent transformation. It aims to make full use of the idle computing power of individuals and enterprises by pooling it in a decentralised way, and to help them earn revenue by leasing that idle capacity out.

DGPT aggregates computing resources of many kinds, including personal mobile devices, computers, servers and data centres. Users only need to connect their idle computing power to the platform to earn revenue easily. Users who need large amounts of computing power can rent it through the platform to complete computing tasks such as AI model training, scientific computation and cryptocurrency mining.

The DGPT platform is an innovative decentralised computing power revenue platform. Through a carefully designed distributed architecture and algorithms, we have decentralised computing power, reducing the risks associated with centralisation while improving the stability and reliability of the system. This design makes the DGPT platform the leading decentralised computing power revenue platform on the market.

DGPT Corporation Inc.’s decentralised computing products and services are now widely used in finance, retail, manufacturing, energy and power, telecoms and healthcare. Leveraging its Transformer training technology, its comprehensive range of AI computing products and its leadership in delivering AI computing solutions, DGPT Corporation Inc. has set industry trends that have guided and accelerated the growth of the entire enterprise AI computing power sector.

Within this trend, DGPT Corporation Inc. not only offers a comprehensive range of AI computing products and leading Transformer training technology, but has also accumulated extensive experience and technology in hardware-software integration, big data processing and deep learning. Our full-stack AI computing solutions, from chips, boards and complete machines to platform software, are built on this technology and experience.

Cooperative Partner

DGPT Corporation Inc. has an extensive network of global partners, including industry leaders such as Microsoft, Apple, Facebook, Goldman Sachs, Morgan Stanley, American Express, McKinsey, Google, Nvidia, Amazon, Alibaba Cloud and Tencent. These partners play key roles throughout the industry chain, from technology development to marketing, and are working with DGPT Corporation Inc. to advance enterprise AI technology. The partnerships are not limited to hardware vendors and service providers; they also span R&D collaboration, resource sharing and market expansion. Below are some of our partners:

1. Google: As the world’s leading search engine and a major cloud service provider, Google works closely with DGPT Corporation Inc. in cloud computing and artificial intelligence, jointly developing and promoting a series of efficient, innovative AI solutions.

2. Nvidia: As one of the world’s largest producers of graphics processing units (GPUs), Nvidia provides DGPT Corporation Inc. with high-performance hardware that enables us to deliver outstanding AI computing services.

3. Amazon: As one of the world’s largest e-commerce platforms and cloud service providers, Amazon collaborates extensively with DGPT Corporation Inc. on AI and cloud computing.

4. Microsoft: Microsoft is a globally renowned technology company, and we cooperate closely with Microsoft in a number of areas, including cloud services, AI technology and enterprise solutions.

5. Apple: As one of the world’s largest technology companies, Apple has a broad reach across consumer electronics, software and cloud services. DGPT Corporation Inc. has partnered with Apple in a number of areas to advance technology.

6. Facebook: Facebook is the world’s largest social networking platform, and we share data and resources with Facebook to explore the application of AI technology in social networking.

7. Goldman Sachs: Goldman Sachs is one of the largest investment banks in the world, and we collaborate closely with Goldman Sachs in areas such as financial AI and big data analytics.

8. Morgan Stanley: Morgan Stanley is a globally recognised financial services company with whom we have worked extensively in areas such as fintech and smart investing.

9. American Express: American Express is one of the largest payment and credit card companies in the world, and we work closely with American Express in areas such as AI-driven payment technology and intelligent risk control.

10. McKinsey & Company: McKinsey is the world’s best-known consulting firm, and we’re working with McKinsey to explore how AI technology can be applied to enterprise consulting and decision analysis.

Qualifications and Certifications

DGPT Corporation Inc. strictly adheres to all relevant industry and regulatory standards and has obtained several certifications and qualifications within the industry. These attest to DGPT Corporation Inc.’s professionalism in the AI field and the reliability of its products and services:

1. ISO 27001: This is an international standard that sets out the requirements for an information security management system, and it demonstrates that we treat information security as a high priority and manage it professionally.

2. ISO 9001: This is an international standard that defines the requirements for a quality management system and certifies our continuous pursuit of product and service quality.

3. AI Safety Certification: This is a specialised certification for AI products and services that shows that our products and services follow the highest standards in AI ethics and safety.

Computing Centres

To better serve customers around the world and meet global demand, DGPT Corporation Inc. has set up computing centres in several countries and regions. These centres are equipped with state-of-the-art hardware and software to deliver stable, efficient AI computing services. Locations include:

1. Singapore: Located in the heart of Asia, providing fast and efficient service to customers in Asia.

2. USA: We have computing centres in several major US cities, such as San Francisco, New York and Los Angeles, to serve the North American market.

3. UK: Our computing centre in London provides easy access for European customers.

4. Other regions: We have also set up computing centres in a number of other countries and regions, including Australia, India and Brazil, to ensure that we can provide the best possible service to customers worldwide.

Product and Service Offerings

Decentralised computing power leasing platform

DGPT Corporation Inc. is committed to developing new technologies and products to meet rapidly changing market demands. The company has a professional R&D team that upholds the spirit of innovation and is committed to advancing AI technology to meet enterprises’ future needs for intelligent transformation. DGPT Corporation Inc. also focuses on corporate social responsibility and actively participates in public welfare activities to give back to society.

Background and problem statement

With the continuing development of artificial intelligence (AI), demand for models is surging, and the emergence of the large language models (LLMs) behind ChatGPT has revolutionised the technology landscape. Training such large AI models typically requires substantial computational resources and time. To meet these demands, the GPU, a high-performance computational accelerator, has become an essential tool for AI computation. For AI companies, the cost of acquiring large numbers of professional-grade NVIDIA A100 or equivalent GPUs is prohibitive, and the GPU shortage also constrains the supply of GPU resources at cloud service providers, which raises the cost of cloud services or limits the GPU resources available to users. When GPU computing power is insufficient, training tasks take longer to complete, which directly slows model iteration and innovation. Insufficient GPU computing power also directly restricts large-scale, complex AI research and innovation projects. For example, in areas such as natural language processing, image generation and reinforcement learning, greater computing power supports more complex models and algorithms, which makes computing resources extremely valuable.

Importance and value of the solution

The shortage of GPU computing power constrains the supply of GPU resources at cloud service providers, which raises the cost of cloud services or limits the GPU resources available to users. For AI companies, the cost of acquiring tens of thousands of NVIDIA A100s is prohibitive, so they have to look for various optimisation methods to run large models. Against this backdrop of severe GPU capacity constraints, DGPT launched the “Idle Computing Power Rental and Revenue Platform”, an innovative platform designed to make full use of the idle computing power of individuals’ and enterprises’ dispersed devices and to help them earn revenue by renting that capacity out.

Objectives and vision of the app

DGPT (Decentralised Computing Power Platform) gives people a way to monetise the idle computing power of their hardware. By consolidating decentralised computing power in the cloud, anyone can rent out computing power for training large enterprise-level AI models. Distributing the work by breaking a training task down into multiple subtasks significantly reduces enterprises’ model-training costs and improves efficiency. At the same time, it creates an additional revenue stream for every computing power provider.
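Breaking a training task into subtasks is commonly done with data parallelism: each worker computes a gradient on its own shard of the data, and the shard gradients are combined. The source does not say how DGPT partitions work, so the Python sketch below is only an illustration under that assumption. It fits the toy model y = w·x + b with plain gradient descent, and the helper names (`gradient`, `split`, `distributed_gradient`) are hypothetical. Weighting each shard’s gradient by its share of the data reproduces the full-batch gradient, up to floating-point rounding.

```python
# Hypothetical sketch of data-parallel training; DGPT's real task splitting is not specified.


def gradient(w: float, b: float, xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Mean-squared-error gradient for the toy model y = w*x + b on one shard."""
    n = len(xs)
    residuals = [w * x + b - y for x, y in zip(xs, ys)]
    gw = sum(2 * r * x for r, x in zip(residuals, xs)) / n
    gb = sum(2 * r for r in residuals) / n
    return gw, gb


def split(items: list, parts: int) -> list[list]:
    """Cut a list into at most `parts` contiguous shards (the "subtasks")."""
    size = -(-len(items) // parts)  # ceiling division
    return [items[i:i + size] for i in range(0, len(items), size)]


def distributed_gradient(w: float, b: float, xs: list[float], ys: list[float],
                         workers: int) -> tuple[float, float]:
    """Combine per-shard gradients, weighted by shard size.

    Each gradient() call stands in for work done on a separate provider's device.
    """
    total = len(xs)
    gw = gb = 0.0
    for sx, sy in zip(split(xs, workers), split(ys, workers)):
        sgw, sgb = gradient(w, b, sx, sy)
        gw += sgw * len(sx) / total
        gb += sgb * len(sx) / total
    return gw, gb


# Toy data generated from y = 3x + 1.
xs = [float(i) for i in range(10)]
ys = [3.0 * x + 1.0 for x in xs]

w = b = 0.0
for _ in range(2000):
    gw, gb = distributed_gradient(w, b, xs, ys, workers=3)
    w -= 0.01 * gw
    b -= 0.01 * gb

print(f"w={w:.3f}, b={b:.3f}")  # prints w=3.000, b=1.000
```

In a real deployment each `gradient` call would run on a different provider’s device and only the small gradient values would travel over the network; here everything runs locally to keep the sketch self-contained.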

The core concept of DGPT (Decentralised Computing Power Platform) is #AItoEarn, which aims to seamlessly combine the power of artificial intelligence (AI) with economic rewards. On the DGPT platform, individuals and organisations can apply their idle computing resources and their skills to a range of AI projects and receive financial compensation in return. This approach creates a broader range of economic opportunities and fosters the advancement and innovation of AI technology.

DGPT is committed to building a mutually beneficial AI earning ecosystem that creates economic opportunities and room for growth for participants by connecting computing power providers with task requesters, and that promotes the widespread application of AI technology. This will bring greater economic returns to individual users and organisations and drive innovation and progress across the industry.

As a decentralised computing power platform designed for the AI revolution, the DGPT ecosystem establishes computing power as an emerging asset class contributing to the trillion-dollar AI economy.

Core functions and features

In the age of AI, computing power has become the most central factor of production. By putting idle devices such as mobile phones and computers to work, individual users can easily earn income in the AI era, giving them an entirely new source of income.

DGPT (Decentralised Computing Power Platform) brings together computing resources of many kinds, including personal mobile devices, computers, servers and data centres. Users only need to connect their idle computing power to the platform to start earning revenue. Users who need large amounts of computing power can rent it through the platform to complete computing tasks such as AI model training, scientific computation and cryptocurrency mining. Whether for individual users or for organisations that need large-scale computing power, DGPT provides a flexible and reliable solution that maximises resource utilisation and economic benefit.

How to earn from AI computing power on DGPT

DGPT gives a strong impetus to the development of AI, because AI requires large amounts of low-cost data processing and computing power, which is exactly what the DGPT platform provides. As AI applications expand into areas such as autonomous driving, smart healthcare and smart manufacturing, demand for computing power will keep growing, and this demand can be met by leasing computing power on DGPT. Currently, users can earn from idle computing power on DGPT in the following ways:

1. Join the AI Computing Power Network: DGPT encourages users to connect the computing power of idle personal devices (e.g., mobile phones and computers) to the platform, converting it into rentable computing resources. Individual users earn income by renting out idle computing power, while enterprises rent this computing power to meet large-scale computing needs.

2. Participate in task training: The DGPT platform lets users take part in a variety of training tasks, supported by stable cloud acceleration services, such as data annotation and model training. Users can choose tasks according to their skills and interests and are paid accordingly, so they can apply their expertise to AI projects and earn financial rewards.

3. Cloud Computing Platform: Users who want higher and more stable computing power revenue can rent stable computing nodes provided by the platform through the Cloud Computing Platform and use them to provide computing services to enterprises. The platform offers a wide range of services and pricing options, so users can choose the one that suits their needs and earn more from it.

All in all, DGPT’s goal is to build a complete AI earning ecosystem that closely connects computing power providers, task requesters and the platform to achieve a sustainable economic model. By combining technology and economics, DGPT is committed to creating more economic opportunities and room for growth for participants and to promoting the wide application of AI technology.

DGPT Innovative Blockchain Core Business Model

The core idea of DGPT is to monetise and productise computing power, creating entirely new ways for applications and users to drive mass adoption. It offers users an easy way to join the future AI ecosystem by taking part in training models on decentralised computing power. Through this participation, users can access new revenue streams and realise greater financial benefits.

With DGPT, users can turn a resource they already have, computing power, into something that can be rented out and used. Users rent out their idle computing power and take part in training models on decentralised computing power, and in return they receive corresponding remuneration. This gives users an innovative way to earn income and make the most of their resources.

Taking part in decentralised model training not only gives users a new source of income but also lets them participate in the future development of AI. It opens the field of AI to users, letting them learn about and experience the latest technologies and innovations. By working together with other participants, users can contribute to the advancement of AI.

DGPT delivers new value in three ways

1. Decentralised computing power meets global demand for AI computing power: Through the decentralised computing power platform, computing resources around the world can be pooled to provide sufficient capacity for AI model training and inference. Monetising computing power in this way creates new cash flows and puts idle devices to full use, while meeting the global demand for large-scale AI computing. The benefits of decentralised computing power include greater total capacity, fault tolerance and better resource utilisation, providing strong support for the development and application of AI models.

2. User participation in #AItoEarn models: Users can get paid to participate in model training by joining #AItoEarn models with their idle computing power. This lets users convert idle computing power into financial gain while contributing to the training and development of intelligent models. Users can also take part in training smarter models through tasks or projects provided by the platform, further increasing their earnings and engagement.

3. Providing data and proprietary AI services to enterprises: Enterprises worldwide in industries such as healthcare, insurance, finance, advertising and education will receive data and proprietary AI services from these models. AI models let enterprises leverage large-scale data and advanced algorithms to optimise business processes, improve decision-making and provide personalised services. By connecting to decentralised computing power platforms, businesses can use these models to create greater value and competitive advantage.

DGPT’s proven and sophisticated business model

Computing power has emerged as a key asset class driving the AI revolution. As AI models are continually iterated and updated, emerging companies have the opportunity to create significant value in the global data market.

Tech giants such as Meta, Google and Microsoft have already created trillions of dollars in value by leveraging AI capabilities; however, users rarely benefit directly. To unlock untapped revenue streams, the key is to build a decentralised solution that lets users productise their computing power and profit from their own data.

By converting computing power into a productised model, users can commercialise their own data and benefit financially from it. This decentralised solution creates new business opportunities for users, enabling them to directly participate in and benefit from the development of AI technology.

Such a business model creates a win-win ecosystem that can both accelerate the AI revolution and let users derive real value from their data. Productising computing power opens up entirely new business prospects for users, providing them with more revenue streams and room for growth.

AI Server Evolution and Parallelism

Driven by changing application requirements, servers have evolved through four types: general-purpose servers, cloud servers, edge servers and AI servers. AI servers use GPUs to enhance their parallel computing capabilities and better support the needs of AI applications.

By application scenario, AI servers can be divided into two types: training and inference. Training demands high chip computing performance, and according to IDC, inference is expected to account for 60.8% of computing demand by 2025 as large models become widely applied.

By chip type, AI servers come in combinations such as CPU+GPU, CPU+FPGA and CPU+ASIC. At present, CPU+GPU is the main choice in China, accounting for 91.9%.

The cost of AI servers comes mainly from chips such as CPUs and GPUs, which take up anywhere from 25 to 70 per cent of the total cost. For training servers, more than 80 per cent of the cost comes from the CPU and GPU.

AI Computing Power Growth Driven by ChatGPT Demand

According to ARK Invest’s estimate, GPT-4 has up to 1,500 billion (1.5 trillion) parameters. Since computing demand grows with parameter count, it can be deduced that GPT-4’s computing demand reaches up to 31,271 PFlop/s-days. As domestic and international vendors accelerate the rollout of large models with hundreds of billions of parameters, training demand is expected to grow further. Together with the rapid growth of inference demand as large models move into real-world applications, this is driving a computing power revolution and rapid growth in the AI server market and shipments.
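The PFlop/s-day figure above can be put in more familiar units. One PFlop/s-day is 10^15 floating-point operations per second sustained for 86,400 seconds. The Python sketch below converts the quoted budget to total FLOPs and then, purely as an illustration, inverts the widely used rule of thumb that training a dense transformer costs roughly C ≈ 6·N·D FLOPs (N parameters, D training tokens) to show how many tokens that budget would imply for 1.5 trillion parameters. Applying this heuristic here is our assumption; the source does not state how ARK Invest derived its estimate.

```python
# Unit conversion plus the C ~ 6*N*D rule of thumb (an assumption, not ARK Invest's stated method).
SECONDS_PER_DAY = 86_400
PFLOPS = 1e15  # 1 PFlop/s = 10**15 floating-point operations per second


def pflops_days_to_flops(pf_days: float) -> float:
    """Convert a compute budget in PFlop/s-days to total floating-point operations."""
    return pf_days * PFLOPS * SECONDS_PER_DAY


def implied_tokens(total_flops: float, params: float) -> float:
    """Invert C ~ 6 * N * D to estimate the number of training tokens D."""
    return total_flops / (6 * params)


gpt4_pf_days = 31_271   # estimate quoted in the text
gpt4_params = 1.5e12    # 1,500 billion parameters, as quoted in the text

flops = pflops_days_to_flops(gpt4_pf_days)
tokens = implied_tokens(flops, gpt4_params)
print(f"{flops:.3e} FLOPs")    # 2.702e+24 FLOPs
print(f"{tokens:.3e} tokens")  # 3.002e+11 tokens
```

Under these assumptions the quoted budget corresponds to about 2.7 × 10^24 FLOPs, or roughly 300 billion training tokens at 1.5 trillion parameters.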

Computational power as a core production factor

Computing power is the economic backbone of the digital age and a core factor of production, and the largest modern tech companies were built by efficiently collecting and monetising users’ computing power. Tech giants such as Meta, Google and Amazon are essentially computing power businesses: they use cloud computing power to create valuable AI models, recommendation models and AI assistants, and have thereby become the most valuable companies in the world.

Computing power is expected to become even more valuable as the AI boom unfolds across the tech industry over the coming decades. AI needs more low-cost computing power to train more advanced and complex algorithms, so companies that provide high-quality computing power stand to benefit from the surge in demand.

Blockchain fuelling sustainable earning ecosystems

Blockchain as an innovative means opens up new possibilities for a variety of applications by decentralising the power, technology, capital and decision-making aspects of modern business. Among the many blockchain applications, tokenisation is one of the most common and widely used approaches.

Tokenisation has enabled mass adoption of blockchain technology by giving users an incentive to participate in new protocols. “X-to-Earn” models have become the fastest way to engage users: the Move-to-Earn startup STEPN has attracted over 5 million users, and the Play-to-Earn game Axie Infinity has reached a total of 30 million users.

However, existing X-to-Earn token startups share a major problem: they are unsustainable and suffer from unlimited token inflation. Their rewards are paid in newly issued tokens, and because the inflow of new users inevitably slows, new capital eventually stops entering the economy to absorb those tokens. The only solution to this unbounded inflation is a strong revenue base that funds payments to users.

DGPT is the first sustainable “earn” ecosystem: it pays users for the computing power they provide and generates revenue by selling that computing power on the global data market. With the DGPT token, we combine commercial applications of AI with a provable tokenisation mechanism.

Unlike other blockchain-based AI projects, DGPT aims to achieve large-scale retail adoption. This opens up an untapped market opportunity: reaching the market through computing power apps. DGPT’s innovative model combines blockchain technology with AI to provide ongoing revenue opportunities for users and drive sustainable economic growth.

DGPT builds a sustainable earning ecosystem on blockchain technology, combining idle computing power on the client side with cloud servers on the server side. DGPT monetises computing power through smart contracts: it sells user-supplied computing power on the global data marketplace and pays users in tokens, with a choice of revenue categories.

The core objective of DGPT is to combine the commercial application of AI with the proof mechanisms of blockchain, creating more value and revenue for users by converting computing power into tradable tokens. This model not only promotes the effective use of computing power but also gives users the opportunity to participate in and benefit from the development of AI.

With DGPT, users can make full use of their idle computing power and earn consistent revenue by participating in the platform. Meanwhile, DGPT’s blockchain technology ensures the security and transparency of transactions, giving users reliable earning opportunities. This makes DGPT an innovative and sustainable earning ecosystem for users.

Computing Power Platform

The DGPT model builds a computing power platform that turns idle computing power into valuable computing resources and lets providers earn through task execution and monetisation mechanisms. Users can flexibly put idle computing power to work on AI and data processing tasks, maximising resource utilisation and economic benefit.

① Computing power access:

Users provide idle computing power by connecting their own hardware, such as personal computers and mobile devices, to the platform. Once the corresponding client software is installed, it automatically measures the device’s computing performance and estimates a revenue range, and the device becomes a DGPT computing power provider. These devices supply computing power to help train models and lower the cost of centralised model operation, and users earn revenue in proportion to their computing power contribution.

② Model training:

The DGPT platform’s computing centre deploys tasks that require large amounts of computing resources, such as machine learning model training and data analysis. The user only needs to supply idle hardware computing power and purchase a suitable platform accelerator; training tasks are then completed at the accelerated computing level.

③ Results Submission:

After completing a task, the idle computing device automatically submits its computational results to the DGPT platform. These results can be trained models, processed data, etc., depending on the task’s requirements.

④ Settlement and monetisation:

The DGPT platform settles with each computing power provider according to the remuneration set for the task and how much of the task was completed, and pays the corresponding remuneration out as revenue. Idle computing power is thereby monetised, and providers receive financial gains from it.
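The four steps above can be sketched as a small in-memory model. This is a hypothetical illustration, not DGPT’s client or settlement code: the `Provider` and `Task` classes, the linear pricing used for the revenue range, and the rule that pays the task reward in proportion to completed work units are all assumptions made for the example.

```python
# Hypothetical model of access -> training -> submission -> settlement; not DGPT's actual code.
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Provider:
    """Step 1 (access): a device registered on the platform with a measured score."""
    name: str
    benchmark_score: float   # hypothetical benchmark result, e.g. sustained TFLOPS
    earnings: float = 0.0

    def revenue_range(self, rate_low: float, rate_high: float, hours: float) -> tuple[float, float]:
        """Estimate a revenue interval, assuming pay scales linearly with the score."""
        return (self.benchmark_score * rate_low * hours,
                self.benchmark_score * rate_high * hours)


@dataclass
class Task:
    """Step 2 (training): a job published by the computing centre, split into work units."""
    task_id: str
    reward: float            # remuneration set for the whole task
    total_units: int
    completed: dict[str, int] = field(default_factory=dict)

    def submit(self, provider: Provider, units_done: int) -> None:
        """Step 3 (submission): record how many work units a provider returned."""
        self.completed[provider.name] = self.completed.get(provider.name, 0) + units_done


def settle(task: Task, providers: dict[str, Provider]) -> None:
    """Step 4 (settlement): pay each provider its share of the reward, then clear the ledger."""
    for name, units in task.completed.items():
        providers[name].earnings += task.reward * units / task.total_units
    task.completed.clear()   # prevents paying the same submissions twice


laptop = Provider("laptop", benchmark_score=10.0)
server = Provider("server", benchmark_score=90.0)
providers = {p.name: p for p in (laptop, server)}

print(laptop.revenue_range(rate_low=0.01, rate_high=0.02, hours=24))

task = Task("train-demo", reward=100.0, total_units=100)
task.submit(laptop, 10)
task.submit(server, 90)
settle(task, providers)
print(laptop.earnings, server.earnings)  # 10.0 90.0
```

Paying per completed work unit keeps settlement independent of how fast each device is; a faster device simply finishes more units and earns more.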

Core mechanism – distributed heterogeneous computing power infrastructure

DGPT adopts a distributed heterogeneous AI computing power operation platform as the foundation for processing and using diverse data efficiently and reliably, meeting the varied computing resource and computing power demands of upper-layer applications.

The heterogeneous AI computing power operation platform adapts and fuses diverse AI computing power. It matches hardware performance to computing requirements, adapts heterogeneous computing power to user requirements, schedules heterogeneous computing power flexibly among nodes, and supports intelligent operation and open sharing of diverse computing power. By processing collaboratively, it draws the maximum computational effectiveness from each type of heterogeneous computing power and provides high-performance, highly reliable computing support for diverse AI application scenarios. The platform consists of four parts: a hardware support platform, a heterogeneous AI computing power adaptation platform, a heterogeneous AI computing power scheduling platform, and an intelligent operation and open platform (see Fig. 1). Built on an architecture that fuses software and hardware, it resolves the poor compatibility and low efficiency caused by multiple architectures, and uses software definition to classify and integrate hardware resources, reconfigure them into pools, and allocate them intelligently.

Technical Architecture for Heterogeneous AI Computing Power Operation Platforms

The heterogeneous AI computing power operation platform adopts a software-hardware convergence architecture, using a software-defined approach to achieve pooled reconfiguration and intelligent allocation of hardware resources.

Resource Reconfiguration Technical Approach

Hardware resources are integrated by category (computing, storage, network and so on) into pools of like resources, so that resources can be regrouped on demand across devices. Pooling resources through hardware reconfiguration integrates CPUs more closely with accelerators such as GPUs, FPGAs and other xPUs, and new ultra-high-speed, fully interconnected internal and external interconnect technology fuses these heterogeneous computing chips, so that computing resources can be scheduled flexibly according to business scenarios. Heterogeneous storage media such as NVMe SSDs, other SSDs and HDDs are likewise linked by high-speed interconnects to form storage resources. At the software level, self-service use and adaptive reconfiguration of hardware resources are promoted, so that resources can be dynamically adjusted, flexibly combined and intelligently allocated to meet multi-application, multi-scenario demands.
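The pooling idea can be illustrated with a minimal allocator that groups devices by category and grants a job’s full request or nothing. It is a hypothetical sketch: the `ResourcePool` class, the category names and the device names are invented for illustration, and a real platform would track capacity, topology and interconnect bandwidth rather than bare device names.

```python
# Hypothetical resource-pool sketch; class, category and device names are illustrative only.
from __future__ import annotations

from collections import defaultdict


class ResourcePool:
    """Pools devices by category and grants a job's whole request or nothing."""

    def __init__(self) -> None:
        self.free: defaultdict[str, list[str]] = defaultdict(list)
        self.allocated: dict[str, list[tuple[str, str]]] = {}

    def register(self, category: str, device: str) -> None:
        """Add a device (e.g. a GPU or an NVMe drive) to its category's pool."""
        self.free[category].append(device)

    def allocate(self, job: str, demand: dict[str, int]) -> list[tuple[str, str]]:
        """Take `demand[category]` devices from each pool for `job`."""
        # Check every category first so a job never receives a partial set.
        for category, count in demand.items():
            if len(self.free[category]) < count:
                raise RuntimeError(f"not enough {category} devices for {job}")
        grant = [(category, self.free[category].pop())
                 for category, count in demand.items()
                 for _ in range(count)]
        self.allocated[job] = grant
        return grant

    def release(self, job: str) -> None:
        """Return a finished job's devices to their pools."""
        for category, device in self.allocated.pop(job):
            self.free[category].append(device)


pool = ResourcePool()
for i in range(4):
    pool.register("gpu", f"gpu-{i}")
pool.register("fpga", "fpga-0")
pool.register("nvme", "nvme-0")

grant = pool.allocate("train-job", {"gpu": 2, "nvme": 1})
print(grant)  # [('gpu', 'gpu-3'), ('gpu', 'gpu-2'), ('nvme', 'nvme-0')]
pool.release("train-job")
print(len(pool.free["gpu"]))  # 4
```

The all-or-nothing check matters for training jobs: a job holding half of the GPUs it needs would block other work without being able to run.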

Advantages of hardware-software convergence architecture technology

On the one hand, the software-hardware convergence architecture supports massive resource processing requirements. The heterogeneous AI computing power operation platform meets the system’s requirements for performance, efficiency, stability and scalability, satisfies the high-bandwidth, low-latency concurrent access requirements of GPU or CPU computing clusters in AI training, and adapts to petabyte- or even exabyte-scale growth in data volume as business deployments grow linearly, while significantly shortening AI model training time and making the fullest use of hardware computing power.

On the other hand, the software-hardware convergence architecture can meet the intelligent demands of multiple application scenarios. Built on software-defined computing, software-defined storage and software-defined networking, it makes full use of the application-aware capabilities of the resource management and scheduling system to establish an intelligent converged architecture: it converges computing and storage while separating control from computing, and fuses diverse computing power through products such as smart network interface cards (SmartNICs). As a result, all resources at the software level can be dynamically combined within the scheduling scope to meet the demands of diverse applications.

Functional Architecture of the Heterogeneous AI Computing Power Operation Platform

Hardware support platform

The hardware support platform is based on a converged architecture and virtualises and pools multiple hardware resources such as CPUs, GPUs, NPUs, FPGAs and ASICs.

It establishes “CPU + AI acceleration chip” architectures such as “CPU+GPU”, “CPU+FPGA” and “CPU+ASIC (TPU, NPU, VPU, BPU)”, fully exploiting the respective strengths of the CPU (interactive response) and the AI acceleration chip (highly parallel computation). In complex AI scenarios involving diverse data processing, the hardware support platform assigns each type of computing task to the hardware module best suited to it, optimising the computing power of the platform as a whole.
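
The assignment of each task type to the most suitable hardware can be sketched as a preference lookup with CPU fallback. The task profiles and the `AFFINITY` table below are hypothetical examples; a real platform would derive such preferences from profiling rather than hard-coding them.

```python
# Hypothetical affinity table: for each task profile, the accelerator kinds that
# suit it, most preferred first. The CPU is the fallback for every profile.
AFFINITY = {
    "interactive": ["CPU"],
    "dense_matrix": ["GPU", "TPU", "NPU", "CPU"],
    "streaming_inference": ["FPGA", "NPU", "GPU", "CPU"],
    "video_decode": ["VPU", "GPU", "CPU"],
}


def route(task_profile: str, available: dict[str, int]) -> str:
    """Reserve one unit of the most suitable device kind that is still free."""
    for kind in AFFINITY.get(task_profile, ["CPU"]):
        if available.get(kind, 0) > 0:
            available[kind] -= 1
            return kind
    raise RuntimeError(f"no device available for {task_profile!r}")
```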

Heterogeneous AI Computing Power Adaptation Platform

The heterogeneous AI computing power adaptation platform is the core layer linking upper-layer algorithmic applications to the underlying heterogeneous computing devices. It drives heterogeneous hardware and software and provides adaptation services covering the entire AI computing workflow, so that users can migrate their applications from their original platform onto it. The platform comprises four parts: the application framework, the development kit, the driver and the firmware (see Figure 2).

The application framework provides rich programming interfaces and operations, adapts the programming frameworks of algorithmic models, abstracts the semantics of algorithmic computation, adapts to different application scenarios and hides the implementation details of heterogeneous acceleration logic, so that programming frameworks work across the differing heterogeneous hardware of different vendors. The development kit defines a heterogeneous programming model based on computational-graph semantics; it is the key software for offloading computational workloads from frameworks to hardware, and it simplifies, unifies and optimises heterogeneous accelerated programming. The driver module lets heterogeneous hardware interact with the operating system and runtime environment. The firmware adapts to the hardware support platform to provide security functions such as security verification, access isolation and hardware-status alarms, and can also act directly as another heterogeneous acceleration device.
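
To illustrate how computational-graph semantics let a development kit hide hardware details, the sketch below evaluates a tiny graph through per-backend kernel tables. The graph format, the `KERNELS` registry and `run_graph` are assumptions for illustration only. Supporting another vendor's hardware would mean registering another kernel table while the graph itself stays unchanged.

```python
from typing import Callable

# Hypothetical kernel registry: backend name -> {operator name -> implementation}.
# Each vendor backend would supply its own implementations of the same operators.
KERNELS: dict[str, dict[str, Callable]] = {
    "cpu": {
        "add": lambda a, b: [x + y for x, y in zip(a, b)],
        "relu": lambda a: [max(0.0, x) for x in a],
    },
}


def run_graph(graph, inputs, backend="cpu"):
    """Evaluate a graph listed as (output name, op, [input names]) in topological order."""
    values = dict(inputs)
    kernels = KERNELS[backend]
    for name, op, args in graph:
        if op not in kernels:
            raise NotImplementedError(f"backend {backend!r} has no kernel for {op!r}")
        values[name] = kernels[op](*(values[a] for a in args))
    return values
```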

Heterogeneous AI Computing Power Scheduling Platform

The heterogeneous AI computing power scheduling platform schedules heterogeneous computing power flexibly across computing nodes, meets high-performance and high-scalability requirements, and forms a standardised, systematic design. It supports AI model development, deployment and inference. Following the principle of software-hardware integration, it slices and schedules AI computing power at fine granularity, accelerates model iteration, supports AI training, and improves compatibility and adaptability for all types of AI models.
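
Fine-grained slicing can be illustrated by treating each GPU as a fixed number of slices and packing requests with a best-fit-decreasing heuristic. The four-slices-per-GPU granularity, the job names and `place_slices` are hypothetical; best-fit decreasing is one common packing strategy, not necessarily the one DGPT uses.

```python
def place_slices(requests: dict[str, int], free: dict[str, int]) -> dict[str, str]:
    """Best-fit decreasing placement of slice requests onto GPUs.

    requests: {job: slices needed}; free: {gpu: free slices}, updated in place.
    Largest jobs are placed first, each on the GPU with the least free space that fits.
    """
    placement = {}
    for job, need in sorted(requests.items(), key=lambda kv: -kv[1]):
        fits = [gpu for gpu, slots in free.items() if slots >= need]
        if not fits:
            raise RuntimeError(f"no GPU has {need} free slices for {job}")
        best = min(fits, key=lambda gpu: free[gpu])
        free[best] -= need
        placement[job] = best
    return placement
```

In the example below, four jobs needing 3, 2, 1 and 2 slices fill two 4-slice GPUs exactly, with no slice left idle.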

The heterogeneous AI computing power scheduling platform consists of three modules: full-stack training, resource management, and monitoring and alerting. The full-stack training module covers the whole lifecycle of AI computing power scheduling, from design and training to online operation, and lets the entire training process be inspected and analysed with visualisation tools. The resource management module provides operation and management policies for multi-tenant resources, IT resources, servers and scheduling resources, along with report, log and fault management for all resources of the platform. The monitoring and alerting module monitors the whole scheduling platform, including resource usage, training jobs, server resources and key components, so that data collection, storage and business resources are monitored effectively and alarms are raised promptly.
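
One behaviour a monitoring and alerting module typically needs is an alarm that fires only when a metric stays high for several consecutive samples, which suppresses alarms on momentary spikes. The `ThresholdAlarm` class, the metric name and the window length are illustrative assumptions.

```python
from collections import deque
from typing import Optional


class ThresholdAlarm:
    """Alarm when a metric exceeds `limit` for `window` consecutive samples."""

    def __init__(self, metric: str, limit: float, window: int = 3) -> None:
        self.metric = metric
        self.limit = limit
        self.recent: deque = deque(maxlen=window)  # True/False per recent sample

    def observe(self, value: float) -> Optional[str]:
        self.recent.append(value > self.limit)
        if len(self.recent) == self.recent.maxlen and all(self.recent):
            return f"ALARM {self.metric}: above {self.limit} for {self.recent.maxlen} samples"
        return None
```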

Intelligent Operation Open Platform

The Intelligent Operation Open Platform provides integrated software-hardware solutions for the whole industry, establishes an open, shared and intelligent support system and development environment for heterogeneous AI computing power, and makes that computing power intelligently operated, secure, reliable and openly shared. For intelligent operation, it manages physical resources, cluster nodes and platform data in a unified way, establishes allocation mechanisms and processes matched to the characteristics of heterogeneous AI computing resources, and, through strong centralised management, supports expanding heterogeneous computing power to host a range of AI model services and scenario applications.

For security, it deploys an active-defence trusted platform control module, integrates and adapts trusted operating systems and platform kernels, and establishes a complete chain of trust across the platform-management process. This creates a trusted computing environment with security controls and trusted-policy management, guards against malicious intrusion and unauthorised equipment replacement, and raises the platform's security and controllability.
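
A chain of trust is commonly built by measuring each component before it runs and folding that measurement into a register, in the style of a TPM PCR extend operation. The sketch below assumes SHA-256 and hypothetical component names; it is not a trusted platform control module, only the hashing pattern such a module relies on. Replacing or reordering any component changes the final value.

```python
import hashlib
import hmac


def extend(register: bytes, component: bytes) -> bytes:
    """Fold one component's measurement into the register: SHA256(old || SHA256(component))."""
    return hashlib.sha256(register + hashlib.sha256(component).digest()).digest()


def measure_chain(components: list[bytes]) -> bytes:
    register = bytes(32)  # start from an all-zero register
    for component in components:
        register = extend(register, component)
    return register


def verify(components: list[bytes], golden: bytes) -> bool:
    """True only if the same components, in the same order, produced `golden`."""
    return hmac.compare_digest(measure_chain(components), golden)
```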

For open sharing, the Intelligent Operation Open Platform addresses the industry's development needs, carries out technology research and development and the commercialisation of results, and builds a developer community. It also provides users with shared resource libraries, development-tool libraries and solution libraries, accelerating the integration and deployment of heterogeneous AI computing power operation platforms across industries and domains.

Unified scheduling mechanism for heterogeneous computing power

To integrate diverse AI chips and computing resources, the heterogeneous AI computing power operation platform needs to fuse diverse heterogeneous computing power, strengthen the technical advantages of the converged architecture, and achieve unified scheduling and efficient allocation of heterogeneous AI computing power. First, it improves the performance of fusion technology to deepen software-hardware synergy. Converged architectures such as new ultra-high-speed internal and external interconnects, pooling and reconfiguration link multiple heterogeneous computing facilities at high speed into an efficient pooled computing centre, while software-defined management of the reconfigured hardware resource pools significantly improves software and hardware performance and ensures flexible scheduling of business resources as well as intelligent operation, maintenance and monitoring. Second, it schedules diverse heterogeneous computing power in a unified way, allocating heterogeneous resources flexibly and efficiently and responding promptly to the demands of all types of AI applications. Taking into account differences in application scenario, interface configuration and load capacity, it establishes a unified scheduling architecture that connects diverse heterogeneous computing resources with upper-layer multi-scenario demand, unifying real-time resource sensing, abstracted resource responses and application scheduling.
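
Unified scheduling that accounts for accelerator type, interface configuration and load capacity can be sketched as a filter-then-score step. The `Node` and `Request` fields and the scoring weights are assumptions chosen for illustration, not a documented DGPT scheduler.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    accel: str          # accelerator kind, e.g. "GPU" or "NPU"
    interfaces: set     # software stacks the node supports, e.g. {"cuda", "onnx"}
    capacity: float     # abstract compute units
    load: float = 0.0


@dataclass
class Request:
    accel_options: list  # acceptable accelerator kinds, most preferred first
    interface: str
    demand: float


def score(node: Node, req: Request) -> Optional[float]:
    """None if the node cannot serve the request; otherwise higher is better."""
    if node.accel not in req.accel_options or req.interface not in node.interfaces:
        return None
    headroom = node.capacity - node.load - req.demand
    if headroom < 0:
        return None
    preference = len(req.accel_options) - req.accel_options.index(node.accel)
    # Arbitrary weighting: accelerator preference dominates, spare capacity breaks ties.
    return preference * 1000 + headroom


def schedule(req: Request, nodes: list) -> Optional[str]:
    """Place the request on the best-scoring node and record the added load."""
    scored = [(s, n) for n in nodes if (s := score(n, req)) is not None]
    if not scored:
        return None
    _, best = max(scored, key=lambda pair: pair[0])
    best.load += req.demand
    return best.name
```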

Deployment of intelligent computing virtual resource pools

Building a software-defined virtual pool of AI computing power through virtualisation strengthens the operating capability of the heterogeneous AI computing power operation platform and optimises the application architecture. First, it improves fine-grained slicing of computing resources. Slicing the resources in the pool finely according to application requirements and business characteristics makes full use of computing power, raises resource utilisation, reduces computing costs, and avoids tedious equipment selection and adaptation in large computing clusters. Second, virtualisation must be configured to match each heterogeneous server's own chip architecture, which further guarantees that heterogeneous computing resources can be pooled. Heterogeneous computing servers, storage and networking can then be combined into a virtual resource pool from which upper-layer applications obtain the computing resources they need through an API, with the virtual pool mapped onto the physical resource pool.
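
A minimal sketch of mapping a virtual resource pool onto physical devices, assuming each device is pre-cut into equal slices of a known kind. `VirtualPool`, its `acquire`/`release`/`physical` methods and the `vres-N` handle format are hypothetical stand-ins for the API described above.

```python
import itertools


class VirtualPool:
    """Maps virtual resource handles onto (device, slice) pairs of a given kind."""

    def __init__(self, devices: dict[str, tuple[str, int]]) -> None:
        # devices: {device name: (kind, number of equal slices)}; hypothetical inventory
        self.free = [(kind, dev, i) for dev, (kind, n) in devices.items() for i in range(n)]
        self.mapping: dict[str, list[tuple[str, str, int]]] = {}
        self._ids = itertools.count()

    def acquire(self, kind: str, n_slices: int) -> str:
        matching = [s for s in self.free if s[0] == kind]
        if n_slices > len(matching):
            raise RuntimeError(f"only {len(matching)} free {kind} slices")
        chosen = matching[:n_slices]
        for s in chosen:
            self.free.remove(s)
        handle = f"vres-{next(self._ids)}"
        self.mapping[handle] = chosen
        return handle

    def release(self, handle: str) -> None:
        self.free.extend(self.mapping.pop(handle))

    def physical(self, handle: str) -> list[tuple[str, int]]:
        """Resolve a virtual handle to the physical (device, slice) pairs behind it."""
        return [(dev, i) for _, dev, i in self.mapping[handle]]
```

The application only ever holds the handle; the pool decides, and can later change, which physical slices stand behind it.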

Converged applications of the heterogeneous operation platform

The heterogeneous AI computing power operation platform is deeply integrated with the intelligent transformation and upgrading of industry, providing high-performance, highly reliable computing support for diverse AI application scenarios and broadening the platform's scope and capability. For smart cities, it provides multi-algorithm fusion scheduling, standardised big-data processing and open multi-scenario application services. For smart parks, it adopts intelligent video management and provides intelligent device management and AI analysis services as an integrated solution. For smart government, it provides video-image model import, algorithm-repository model import and intelligent analysis template orchestration to respond quickly to varied application needs. In industry, it enables intelligent applications such as predictive maintenance of production equipment, high-precision AI-enabled machinery and industrial AR production assistance. It also supports collaborative scientific research and project innovation management with powerful intelligent computing, and supports intelligent finance and business innovation with high-speed, high-precision, large-volume data processing.

Building a full-scenario cooperation matrix

An open ecosystem is an effective way to fuse diverse computing power. A matrixed cooperation model promotes technology fusion and innovation, scenario fusion and application, and service fusion and delivery, and improves the ecological framework for building and developing heterogeneous AI computing power operation platforms. On the one hand, an integrated solution spanning the whole chain and all scenarios is needed, with continuous multi-party cooperation and an ecosystem architecture that runs from hardware and algorithms through AI middle platforms to industry applications. On the other hand, an open, open-source ecosystem and a cooperative, mutually beneficial alliance are needed to change production and application-service models and to keep improving the technical capability and construction level of the heterogeneous AI computing power operation platform. Open organisations such as the ODCC (Open Data Center Committee) should make full use of their platform advantages to open up basic software and hardware, integrate capabilities, and incubate more multi-dimensional, composite scenario intelligence solutions.

Technical standards for heterogeneous computing power scheduling

Standardise the capabilities of heterogeneous computing power scheduling technology: unify the API standards and runtime computing foundations of heterogeneous hardware for deep learning, standardise how deep learning tasks are defined and executed, and decouple upper-layer applications from the underlying heterogeneous hardware platforms. Coordinate with manufacturers across the industry chain, focusing on unified management of heterogeneous devices and hardware, integration and adaptation of system-layer drivers, alignment of acceleration libraries at the model and operator layer, high-performance migration and optimisation of algorithm-layer frameworks, and independent development of the platform-layer scheduler, so that heterogeneous computing power can be managed and scheduled effectively.
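
One way to standardise task definitions is a hardware-neutral task schema that applications submit and schedulers interpret, so that applications never name a specific device. The `TaskSpec` fields, the allowed task kinds and the JSON encoding below are assumptions, not an existing standard.

```python
import json
from dataclasses import asdict, dataclass

ALLOWED_KINDS = {"training", "inference"}  # hypothetical task kinds


@dataclass(frozen=True)
class TaskSpec:
    """Hardware-neutral description of a deep learning task (hypothetical schema)."""
    name: str
    kind: str
    framework: str                   # e.g. "pytorch", "onnx"
    accelerators: int
    accelerator_kind: str = "any"    # "any" leaves the hardware choice to the scheduler

    def __post_init__(self) -> None:
        if self.kind not in ALLOWED_KINDS:
            raise ValueError(f"unknown task kind {self.kind!r}")
        if self.accelerators < 1:
            raise ValueError("accelerators must be >= 1")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "TaskSpec":
        return cls(**json.loads(text))
```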

Unified Evaluation Methodology for Hardware and Algorithm Adaptation

Heterogeneous computing power adaptation standards are formulated from the perspectives of hardware adaptation and algorithm unification to achieve interoperability and maximum performance across heterogeneous computing power. For hardware adaptation, standardise heterogeneous chips and their underlying interfaces and form standardised methods for testing chip function, performance, stability and compatibility; standardise the technical requirements and performance specifications of heterogeneous AI servers, covering design specifications, management strategies and operating environments, to promote standardised server research and development, production and testing. For algorithm unification, standardise supervised, unsupervised and reinforcement learning models for distributed AI deep learning frameworks; standardise evaluation metrics for heterogeneous AI models, such as convergence time, convergence accuracy, throughput and latency; and standardise deployment requirements for heterogeneous AI models across application scenarios, adaptation requirements in the design and development of distributed training platforms, and methods for evaluating the energy efficiency of model inference.
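
Several of the evaluation metrics named above reduce to simple formulas. The sketch below assumes nearest-rank percentiles for latency and inferences per joule (energy = average power × time) for energy efficiency; a formal standard may define these differently.

```python
import math


def throughput(n_samples: int, seconds: float) -> float:
    """Samples processed per second."""
    return n_samples / seconds


def percentile(latencies_ms: list[float], p: float) -> float:
    """Nearest-rank percentile, p in (0, 100]."""
    ordered = sorted(latencies_ms)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def inferences_per_joule(n_inferences: int, avg_power_watts: float, seconds: float) -> float:
    """Energy efficiency: inferences divided by energy used (watts x seconds = joules)."""
    return n_inferences / (avg_power_watts * seconds)
```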

Computing Node Platform

Individual hardware devices have limited performance and unstable computing output, which limits their earnings, while optimising AI models requires stable, continuous computing power. To earn more, and more consistently, users can lease computing nodes hosted on cloud services through the platform and so obtain a stable, continuous supply of computing power. Using the platform's cloud services, users can meet their need for stable computing power and realise higher revenue potential.

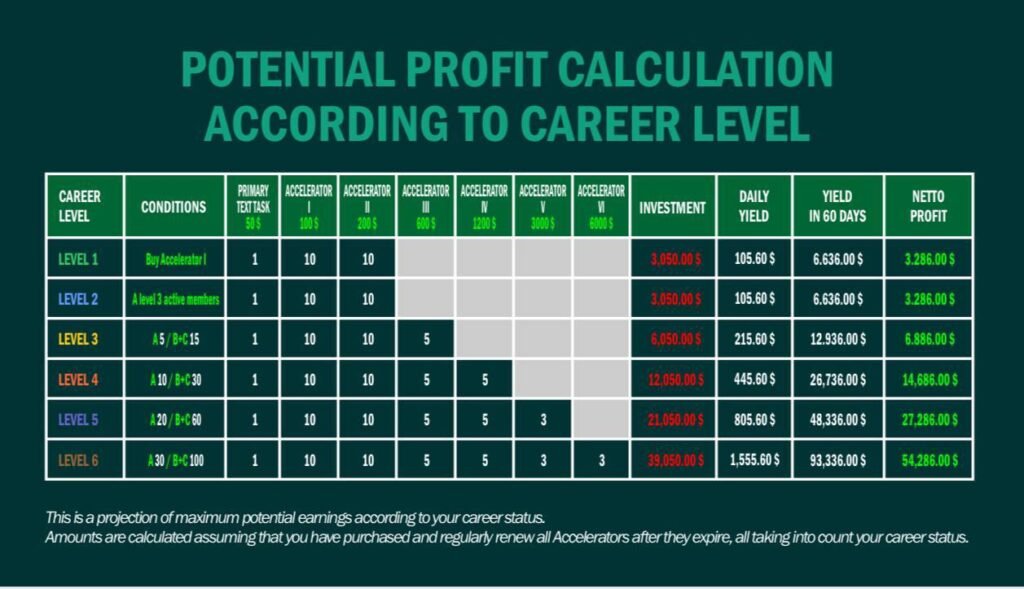

① Computing node

Users can access different ranges of computing power according to their level: the higher the level, the higher the computing specification they receive and the higher their earnings.

② Computing accelerator

Computing accelerators are acceleration packages that improve the speed and stability of personal devices. Adding computing nodes provides extra computing resources so that tasks can be processed in parallel; compared with relying only on limited local resources, this can significantly shorten task execution time and improve efficiency and responsiveness.
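
How much additional nodes can shorten a task depends on how much of the task runs in parallel. Amdahl's law, a standard model that is not taken from this document, gives the upper bound: speedup = 1 / ((1 - p) + p / n) for a parallel fraction p spread over n nodes. The sketch ignores communication overhead, so real speedups are lower.

```python
def amdahl_speedup(parallel_fraction: float, n_nodes: int) -> float:
    """Upper bound on speedup when `parallel_fraction` of the work is spread over n_nodes."""
    if not 0.0 <= parallel_fraction <= 1.0 or n_nodes < 1:
        raise ValueError("parallel_fraction must be in [0, 1] and n_nodes >= 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / n_nodes)
```

With 90% of the work parallelisable, 10 nodes give at most about 5.3x, and no number of nodes can exceed 10x.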

Different acceleration packages provide different revenue bonuses. Users who take part in training tasks must purchase a cloud acceleration package to participate. Computing accelerators let DGPT handle more tasks or larger data volumes, which means the platform can offer more services and attract more users and partners, increasing revenue and profit and creating more incentive to take part in the DGPT ecosystem.

Quantitative Income Platform

The Quantitative Income Platform is a proprietary AI model for financial solutions built on the DGPT model. By extending DGPT's capabilities into the financial domain, the platform handles financial data and tasks better and performs well on financial benchmarks. Drawing on a large number of financial data sources, we have built a dataset of 363 billion tokens to support all types of financial tasks. The Quantitative Income Platform runs on the DGPT platform and uses AI financial models to analyse market-sentiment factors, capital flows and price-spread data in order to arbitrage between major platforms and earn spread returns.

We combine state-of-the-art GPT algorithms with awareness of macro market changes to maximise returns, continuously update our return-optimisation algorithms in real time, and perform high-frequency arbitrage across different trading channels. Users can access the Quantitative Finance platform and select a suitable underlying asset for compounding. When funds are deposited on the platform, the quantitative financial model automatically allocates them to arbitrage investments.

Overview of AI techniques applied to DGPT

In terms of technical framework, DGPT aggregates users' idle computing power to enhance its data processing and analysis capabilities. It adopts a distributed computing framework that uses containerisation and cloud-native architecture to decompose and allocate tasks, building a highly scalable, flexible computing network on open-source distributed computing platforms such as Kubernetes and Apache Spark. Containers running on Kubernetes isolate and deploy tasks: each task is packaged into its own container with the algorithms, data and dependencies it needs, so tasks run independently across different environments and distributed computing resources are fully used. By drawing on idle computing resources, DGPT can handle complex data operations and algorithmic computations more efficiently.
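
The decompose-and-dispatch pattern can be sketched in a few lines. Here a thread pool stands in for containers scheduled by Kubernetes, an assumption made so the example runs locally; `split` and `run_distributed` are illustrative names.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data: list, n_chunks: int) -> list:
    """Split a list into n_chunks contiguous chunks whose sizes differ by at most one."""
    k, r = divmod(len(data), n_chunks)
    chunks, start = [], 0
    for i in range(n_chunks):
        end = start + k + (1 if i < r else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def run_distributed(data: list, worker, n_workers: int = 4) -> list:
    """Run `worker` on each chunk in parallel; results come back in chunk order."""
    chunks = split(data, n_workers)
    with ThreadPoolExecutor(max_workers=n_workers) as pool:
        return list(pool.map(worker, chunks))
```

For example, `run_distributed(list(range(100)), sum)` returns four partial sums that add up to 4950.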

In terms of data processing, DGPT uses big-data tools such as Apache Hadoop and Apache Spark to process and analyse data efficiently on its users' aggregated idle computing power.

For data synchronisation and communication, when a task needs to process a large amount of data, the system distributes the data to the nodes in the cluster and synchronises it to keep it consistent. The nodes also communicate with one another to share task status and results so that they can work together on distributed computations.
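
A simple consistency check after synchronisation compares a content hash of each node's copy and flags the nodes that disagree with the majority for re-synchronisation. The function names are hypothetical, and majority voting is only one possible policy; a tie between two equally common digests would need an explicit rule.

```python
import hashlib
from collections import Counter


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def find_stale_nodes(replicas: dict[str, bytes]) -> tuple[str, list[str]]:
    """Return the majority digest and the sorted names of nodes whose copy differs from it."""
    digests = {node: digest(blob) for node, blob in replicas.items()}
    majority, _ = Counter(digests.values()).most_common(1)[0]
    stale = sorted(node for node, d in digests.items() if d != majority)
    return majority, stale
```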

For elastic scaling and load balancing, DGPT scales elastically and balances load as user participation and task volume grow. The system adjusts the size of the device cluster to actual demand and distributes tasks automatically according to node load, making optimal use of resources and executing tasks efficiently.
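
Load-aware task distribution and elastic sizing can be sketched with a min-heap of node loads (assigning the largest tasks first, the longest-processing-time heuristic) and a ceiling formula for the cluster size. Both the heuristic and the 80% target utilisation are assumptions for illustration.

```python
import heapq
import math


def assign_least_loaded(tasks, nodes):
    """Give each (name, cost) task to the currently least-loaded node, largest tasks first."""
    heap = [(0.0, node) for node in nodes]
    heapq.heapify(heap)
    assignment = {}
    for name, cost in sorted(tasks, key=lambda t: -t[1]):
        load, node = heapq.heappop(heap)
        assignment[name] = node
        heapq.heappush(heap, (load + cost, node))
    return assignment, {node: load for load, node in heap}


def nodes_needed(total_load, capacity_per_node, target_util=0.8):
    """Smallest cluster size that keeps average utilisation at or below `target_util`."""
    return max(1, math.ceil(total_load / (capacity_per_node * target_util)))
```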

For human-computer interaction, DGPT provides APIs and SDKs, integrated into its app, so that users can easily access the platform and call its functions and services. Through the API and SDK, users can submit tasks, query task status and retrieve results, communicating with the platform efficiently.
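
The submit, query-status and fetch-result flow can be illustrated with an in-memory stand-in. `FakeDGPTClient` and its methods are hypothetical and do not describe DGPT's actual API or SDK; `run_pending` simulates the platform executing queued work.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class FakeDGPTClient:
    """In-memory stand-in for a hypothetical task API: submit, status, result."""
    _jobs: dict = field(default_factory=dict)
    _ids: itertools.count = field(default_factory=itertools.count)

    def submit(self, fn, *args) -> str:
        job_id = f"job-{next(self._ids)}"
        self._jobs[job_id] = {"status": "QUEUED", "fn": fn, "args": args, "result": None}
        return job_id

    def run_pending(self) -> None:
        """Simulate the platform executing every queued job."""
        for job in self._jobs.values():
            if job["status"] == "QUEUED":
                job["result"] = job["fn"](*job["args"])
                job["status"] = "DONE"

    def status(self, job_id: str) -> str:
        return self._jobs[job_id]["status"]

    def result(self, job_id: str):
        job = self._jobs[job_id]
        if job["status"] != "DONE":
            raise RuntimeError(f"{job_id} has not finished")
        return job["result"]
```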

In terms of storage and computation, DGPT assembles users' idle devices into a distributed device cluster. Client software connects each idle device to the cluster and contributes its storage space as a storage node for storage tasks, while DGPT's cloud servers act as the server side. Distributed storage systems such as the Hadoop Distributed File System (HDFS) and Ceph split data into blocks and distribute them across the cluster of idle devices for parallel processing, providing data redundancy and high-throughput storage that improve data reliability and access speed. In this way, DGPT uses the storage resources of idle devices to increase the overall capacity and performance of the storage system. The cluster architecture also turns idle devices into valuable computing resources, letting users join the DGPT ecosystem, supplying more computing power for AI training and inference tasks, and enabling users to benefit from shared storage services.
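
The block-splitting and replication idea behind HDFS-style storage can be sketched as follows. The tiny block size, the replication factor, round-robin placement and the `write`/`read` helpers are illustrative assumptions; HDFS itself uses far larger blocks and rack-aware placement. Because every block has replicas on two different devices, the data survives the loss of any single device.

```python
def split_blocks(data: bytes, block_size: int) -> list[bytes]:
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def write(data: bytes, nodes: list[str], block_size: int = 4, replication: int = 2):
    """Store each block on `replication` distinct nodes, placed round-robin."""
    if replication > len(nodes):
        raise ValueError("replication factor exceeds number of nodes")
    store = {n: {} for n in nodes}
    blocks = split_blocks(data, block_size)
    for b, chunk in enumerate(blocks):
        for k in range(replication):
            store[nodes[(b + k) % len(nodes)]][b] = chunk
    return store, len(blocks)


def read(store: dict, n_blocks: int, alive: list[str]) -> bytes:
    """Reassemble the data from any live replica of each block."""
    out = []
    for b in range(n_blocks):
        holder = next((n for n in alive if b in store[n]), None)
        if holder is None:
            raise OSError(f"block {b} lost: no live replica")
        out.append(store[holder][b])
    return b"".join(out)
```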

Computing Power Settlement Formula

TFLOPS: the unit of general-purpose computing power, equal to 10^12 floating-point operations per second.

Computing power is settled using the PPS (Pay Per Share) method, based on each device's percentage contribution to the computing power pool, plus an incentive fee for stable supply.

| Computing power revenue | Pool reward | Stable-supply incentive fee | Bonus |
|---|---|---|---|
| Settlement method | PPS | PPLNS | Block rewards |
| Fee rate | 4% | | |
| Yield calculation formula | Computing power input workload / computing power overhead * share incentive * (1 - fee rate) | Total user computing power / (total pool computing power - SOLO-settled computing power) * return on shares * (1 - fee rate) | / |
| Allocation rules and timing | Every half hour, allocation is based on the current computing power overhead, calculated as the user's percentage of the pool's total computing power (excluding SOLO-settled computing power) during the 30-minute cycle. | | Allocated per block |
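
The user-share formula in the settlement table can be written as a function for one 30-minute cycle. Values are in TFLOPS; treating "return on shares" as the cycle's total distributable reward, and the example figures, are assumptions for illustration.

```python
FEE_RATE = 0.04  # fee rate from the settlement table


def settle_cycle(user_tflops: float, pool_tflops: float, solo_tflops: float,
                 cycle_reward: float, fee_rate: float = FEE_RATE) -> float:
    """Payout for one cycle: user / (pool - SOLO) * reward * (1 - fee rate)."""
    eligible = pool_tflops - solo_tflops  # SOLO-settled power is excluded from the share
    if eligible <= 0:
        raise ValueError("no eligible pool computing power in this cycle")
    return user_tflops / eligible * cycle_reward * (1 - fee_rate)
```

For example, a user supplying 10 TFLOPS to a pool of 1,010 TFLOPS, of which 10 TFLOPS is SOLO-settled, holds a 1% share; with a cycle reward of 1,000 units, the payout after the 4% fee is 9.6 units.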

Summary

With the development of cloud computing, edge computing and related technologies, DGPT (Decentralised Arithmetic Platform) aims to build a complete AI money-making ecosystem that lets users take part in the widespread application of AI technology and creates a sustainable economic model by turning idle computing power into economic value. Within this ecosystem, DGPT acts as an intermediary platform connecting computing power providers, task demanders and the platform itself, providing participants with new economic opportunities and room for growth.

With blockchain technology and a tokenisation mechanism, DGPT addresses the sustainability problem of the traditional “earn” model and provides users with a stable, long-lasting source of income. Users can lease computing nodes deployed on cloud services to provide a stable supply of computing power and so earn more. The supply of and demand for these computing nodes are recorded and traded on the blockchain, ensuring transparency and security.

DGPT combines the commercial application of AI with blockchain tokenisation so that computing power can be traded commercially through tokens. This model gives users an opportunity to participate in the AI market and opens new market opportunities for large-scale adoption of AI technology. DGPT's goal is to achieve large-scale retail adoption of AI technology and bring computing power applications to the marketplace, creating value for users and businesses.

In summary, DGPT is committed to freeing up idle computing power and ushering in an era of earning with AI. By building a sustainable “earning” ecosystem that combines the monetisation of computing power with a tokenisation mechanism, DGPT provides users with a stable source of income and promotes the widespread application and market development of AI technology.